With media budgets under increasing scrutiny and traditional attribution losing credibility amid privacy changes, brands are turning to incrementality testing to answer a fundamental question: is this marketing actually driving new sales, or taking credit for sales that would have happened anyway?

Increasingly, the most reliable way to answer that question is geo-based incrementality testing using a synthetic control methodology. Synthetic control has emerged as the gold standard because it produces results that are statistically rigorous, transparent, and defensible when real dollars are on the line.

That said, synthetic control isn’t the only measurement approach available. Incrementality can be measured in several ways, each with its own assumptions, confidence levels, and tradeoffs. Understanding those options and where they fall short can help clarify why synthetic control stands apart.

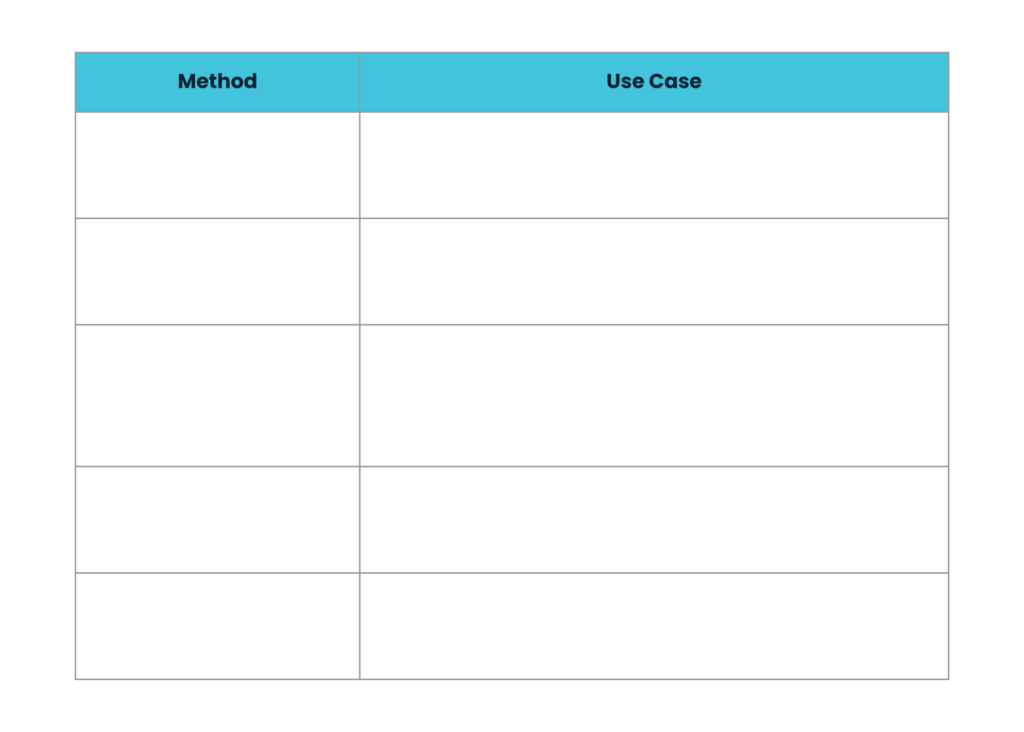

Below is an overview of the major methodologies, when each makes sense, and why synthetic control consistently delivers the strongest foundation for decision-making:

- Why Synthetic Control Leads

At its core, incrementality testing depends on comparison. The challenge is finding a comparison that’s actually valid.

Traditional geo testing asks you to pick a control market and hope it behaves like your test market would have if nothing changed. Synthetic control removes that guesswork.

Instead of relying on a single comparison market, synthetic control builds a custom counterfactual by blending data from many markets. The algorithm identifies the weighted combination of markets that most closely mirrors the test market’s historical behavior, effectively creating a “synthetic” version of that market.
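
The weighting step can be sketched in Python. This is a minimal illustration under stated assumptions, not any vendor's implementation. It assumes the common formulation in which weights are non-negative, sum to 1, and are chosen to minimize the squared gap to the test market over the pre-test period. The exponentiated-gradient solver, the function names `fit_synthetic_weights` and `synthetic_series`, and the donor series are all hypothetical. Production tools typically use a dedicated constrained optimizer and may also match on other market characteristics.

```python
import math


def fit_synthetic_weights(test_pre, donors_pre, steps=10_000, lr=1.0):
    """Fit donor weights for a synthetic control (illustrative solver, an assumption).

    test_pre:   pre-period values for the test market.
    donors_pre: dict mapping donor market name -> pre-period values (same length).
    Returns a dict of weights that are >= 0 and sum to 1.
    """
    names = list(donors_pre)
    n, periods = len(names), len(test_pre)
    # Rescale so the step size behaves similarly regardless of revenue scale.
    scale = max(abs(v) for v in test_pre) or 1.0
    y = [v / scale for v in test_pre]
    x = [[donors_pre[name][t] / scale for name in names] for t in range(periods)]
    w = [1.0 / n] * n  # start from equal weights
    for _ in range(steps):
        # Gap between the current synthetic series and the test market, per period.
        resid = [sum(w[j] * x[t][j] for j in range(n)) - y[t] for t in range(periods)]
        # Gradient of the mean squared gap with respect to each weight.
        grad = [2.0 / periods * sum(resid[t] * x[t][j] for t in range(periods)) for j in range(n)]
        # Exponentiated-gradient update: multiplicative, so weights stay positive;
        # renormalizing afterwards keeps them summing to 1.
        w = [w[j] * math.exp(-lr * grad[j]) for j in range(n)]
        total = sum(w)
        w = [wj / total for wj in w]
    return dict(zip(names, w))


def synthetic_series(weights, donors):
    """Weighted blend of donor series: the 'synthetic' version of the test market."""
    periods = len(next(iter(donors.values())))
    return [sum(weights[name] * donors[name][t] for name in weights) for t in range(periods)]


# Usage with hypothetical weekly revenue for three donor markets.
donors = {
    "donor_a": [10, 12, 11, 13, 15, 14, 16, 18],
    "donor_b": [20, 19, 21, 22, 20, 23, 24, 22],
    "donor_c": [5, 9, 4, 10, 3, 11, 2, 12],
}
# Toy test market built as an exact 50/30/20 blend of the donors.
test_market = [0.5 * a + 0.3 * b + 0.2 * c for a, b, c in zip(*donors.values())]
weights = fit_synthetic_weights(test_market, donors)
print({name: round(wt, 2) for name, wt in weights.items()})
```

Exponentiated gradient is used here only because it keeps the weights non-negative and summing to 1 without an external solver. Because the toy test market is an exact blend of the donors, the fitted weights should land close to 0.5, 0.3, and 0.2.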

A simple analogy: imagine you want to know whether a new pair of running shoes makes a sprinter faster. Comparing her to one other runner isn’t fair, because every runner has a different baseline. But if you could mathematically blend ten runners to create a near-perfect match to her historical speed, any improvement after switching shoes becomes far more credible.

That’s exactly what synthetic control does for markets.

Instead of comparing Dallas to Houston and hoping they’re similar enough, you compare Dallas to a “synthetic Dallas” built to track how Dallas would have performed without the media change. Any post-test difference can then be attributed to the intervention with much higher confidence.

In practice:

Say you want to test whether YouTube is driving incremental revenue or simply capturing demand that would have converted anyway. You pause YouTube in three markets (Denver, Phoenix, and Portland) for six weeks.

Rather than selecting a few “similar” control markets, synthetic control builds a tailored counterfactual for each test market using weighted combinations of your remaining 40+ markets. Denver’s synthetic twin might be composed of 15% Minneapolis, 25% Seattle, 20% Atlanta, and so on, based on historical alignment.

If Denver’s synthetic version is projected to generate $2M in revenue during the test window, but actual Denver generates $1.7M without YouTube, you now have a defensible estimate of YouTube’s $300K incremental contribution in that market.
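
The arithmetic behind that estimate fits in a few lines of Python. The function name `incremental_contribution` is hypothetical, and the sign convention assumes a holdout test like this one: media is paused in the test market, so the channel is credited with the synthetic (counterfactual) revenue minus the actual revenue.

```python
def incremental_contribution(synthetic_revenue, actual_revenue):
    """Holdout test (assumed): media was paused in the test market, so the channel is
    credited with the revenue the synthetic market earned that the real market did not."""
    lift = synthetic_revenue - actual_revenue
    return lift, lift / synthetic_revenue


# Denver figures from the example above.
lift, share = incremental_contribution(2_000_000, 1_700_000)
print(f"Incremental revenue: ${lift:,.0f} ({share:.0%} of the counterfactual)")
# Incremental revenue: $300,000 (15% of the counterfactual)
```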

These results come with confidence ranges, full methodological transparency, and outputs that can feed directly into media mix models and forecasting tools. There’s no black box, and no hand-waving when leadership asks how the comparison was built.

This rigor is what makes synthetic control the benchmark, especially when testing will inform long-term investment decisions or recurring measurement programs.

- How Other Incrementality Methods Fit In

While synthetic control offers the strongest foundation, other approaches still have value when used appropriately.

- Platform Lift Studies

Meta, Google, and TikTok offer built-in lift studies that compare exposed and unexposed users within their platforms. These tests are easy to deploy and deliver fast results, making them useful for quick directional insights.
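
The headline readout of such a study is usually relative lift: the difference in conversion rates between the exposed and control groups, divided by the control rate. The Python sketch below shows only that arithmetic, with hypothetical function names and group sizes. Each platform applies its own methodology, and this is not any platform's API.

```python
def relative_lift(exposed_conv, exposed_users, control_conv, control_users):
    """Relative lift of the exposed group over the unexposed (control) group."""
    exposed_rate = exposed_conv / exposed_users
    control_rate = control_conv / control_users
    return (exposed_rate - control_rate) / control_rate


def incremental_conversions(exposed_conv, exposed_users, control_conv, control_users):
    """Conversions in the exposed group beyond what the control rate predicts."""
    return exposed_conv - exposed_users * (control_conv / control_users)


# Hypothetical study with 100,000 users per group.
print(f"{relative_lift(1_200, 100_000, 1_000, 100_000):.0%}")  # 20%
print(round(incremental_conversions(1_200, 100_000, 1_000, 100_000)))  # 200
```

Both figures count only conversions the platform can track, which is the limitation described next.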

However, true isolation is difficult. Users in control groups may still encounter the brand elsewhere, share devices, or be influenced indirectly. Results are also constrained to platform-tracked conversions rather than total business outcomes.

Platform lift studies are helpful for tactical readouts, but they’re often less defensible than broader measurement approaches when budgets are under scrutiny.

- Matched Market Testing

Matched market testing introduces geographic separation, comparing performance between similar cities or regions where media is turned on versus off.

This approach is a solid entry point for brands new to geo-based testing. The limitation is that no two markets are ever truly identical. Differences in competition, local economics, and consumer behavior introduce noise that can weaken confidence in the results.
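
One simple way to read a matched market test is to scale the control market’s test-period results by the test-to-control ratio observed before the test, then compare that against what the test market actually did. The sketch below assumes this ratio approach (one of several possible estimators), a test market where media was switched on, and hypothetical weekly figures; `matched_market_lift` is an illustrative name. Any divergence between the two markets during the test that has nothing to do with media lands directly in the estimate.

```python
def matched_market_lift(test_pre, control_pre, test_post, control_post):
    """Ratio-adjusted matched market estimate of incremental sales in the test market."""
    # How large the test market ran relative to its matched control before the test.
    ratio = sum(test_pre) / sum(control_pre)
    # What the test market "should" have done during the test, judged from the control.
    expected_test_post = ratio * sum(control_post)
    return sum(test_post) - expected_test_post


# Hypothetical weekly sales; media was switched on in the test market after week 4.
lift = matched_market_lift(
    test_pre=[100, 110, 105, 95],
    control_pre=[200, 220, 210, 190],
    test_post=[120, 118],
    control_post=[210, 205],
)
print(lift)  # 30.5
```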

Synthetic control was developed specifically to overcome this limitation.

- Time Series Methods

Time series approaches compare a market’s performance before and after a media change, estimating what would have happened based on historical trends.

Simple models rely on past performance alone, while more advanced Bayesian structural time series (BSTS) models incorporate seasonality, trends, and even performance in other markets to improve predictions.
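
The simplest version of this idea fits a trend to the pre-change period and projects it forward as the counterfactual. The Python sketch below assumes a straight-line trend and hypothetical weekly data, and the function names are illustrative. It deliberately omits the seasonality, trend changes, and cross-market inputs that a BSTS model would add.

```python
def linear_trend_forecast(pre, horizon):
    """Fit y = intercept + slope * t to the pre-period and project `horizon` periods ahead."""
    n = len(pre)
    t_mean = (n - 1) / 2
    y_mean = sum(pre) / n
    slope = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(pre)) / sum(
        (t - t_mean) ** 2 for t in range(n)
    )
    intercept = y_mean - slope * t_mean
    return [intercept + slope * t for t in range(n, n + horizon)]


def pre_post_effect(pre, post):
    """Observed post-period total minus the trend-based counterfactual."""
    forecast = linear_trend_forecast(pre, len(post))
    return sum(post) - sum(forecast), forecast


# Hypothetical weekly sales: six weeks before a media change, three weeks after.
effect, forecast = pre_post_effect([100, 102, 104, 106, 108, 110], [120, 122, 124])
print(forecast, effect)  # [112.0, 114.0, 116.0] 24.0
```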

These methods are especially useful when geographic holdouts aren’t possible, such as national TV buys, or when analyzing changes retroactively. The tradeoff is that you’re comparing against a modeled prediction rather than an actual control, which can be harder to defend in high-stakes scenarios.

- Difference-in-Differences

Difference-in-differences compares the change in outcomes for test markets versus control markets over the same period. It’s particularly useful when media changes occur organically rather than through a planned experiment.
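
In its simplest two-group form, the estimate is the test group’s change minus the control group’s change. The sketch below assumes period averages as the outcome and uses hypothetical figures and function names; real analyses typically fit this as a regression so they can also report uncertainty.

```python
def average(values):
    return sum(values) / len(values)


def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Change in the test group minus change in the control group over the same period."""
    test_change = average(test_post) - average(test_pre)
    control_change = average(control_post) - average(control_pre)
    return test_change - control_change


# Hypothetical weekly figures: test markets rose by 30, control markets by 15.
estimate = diff_in_diff(
    test_pre=[98, 102], test_post=[128, 132],
    control_pre=[79, 81], control_post=[94, 96],
)
print(estimate)  # 15.0
```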

The key assumption is that both groups were trending similarly prior to the change. If that assumption doesn’t hold, results can be misleading without obvious warning signs.
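
A basic sanity check on that assumption is to compare the two groups’ pre-period slopes before trusting the estimate. The Python sketch below assumes a straight-line slope per group; the helper names `slope` and `pre_trend_gap` and the example series are hypothetical. A small gap is consistent with parallel trends but does not prove it.

```python
def slope(series):
    """Least-squares slope of a series against its period index."""
    n = len(series)
    t_mean = (n - 1) / 2
    y_mean = sum(series) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def pre_trend_gap(test_pre, control_pre):
    """Difference in pre-period slopes; near zero is consistent with parallel trends."""
    return slope(test_pre) - slope(control_pre)


# Hypothetical pre-period series. Levels may differ; only the trends need to match.
print(pre_trend_gap([100, 102, 104, 106], [80, 82, 84, 86]))  # 0.0
print(pre_trend_gap([100, 102, 104, 106], [80, 81, 82, 83]))  # 1.0
```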


- Choosing the Right Tool

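The best measurement programs know when to deploy each approach: platform lift studies for quick, directional tactical readouts; matched market testing as an entry point to geo-based testing; time series methods when geographic holdouts aren’t possible or when changes are analyzed retroactively; and difference-in-differences when media changes occur organically rather than through a planned experiment.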

Each methodology has a role. But when you’re defending a major budget decision, evaluating whether to scale a significant investment, or building a measurement program meant to guide strategy over time, synthetic control provides the rigor that holds up under scrutiny.

As the bar for proving marketing impact continues to rise, synthetic control is how leading brands clear it, especially when results are validated across repeated tests and complementary methodologies.